AI in dev tools, late 2024: where we are and where we might be headed

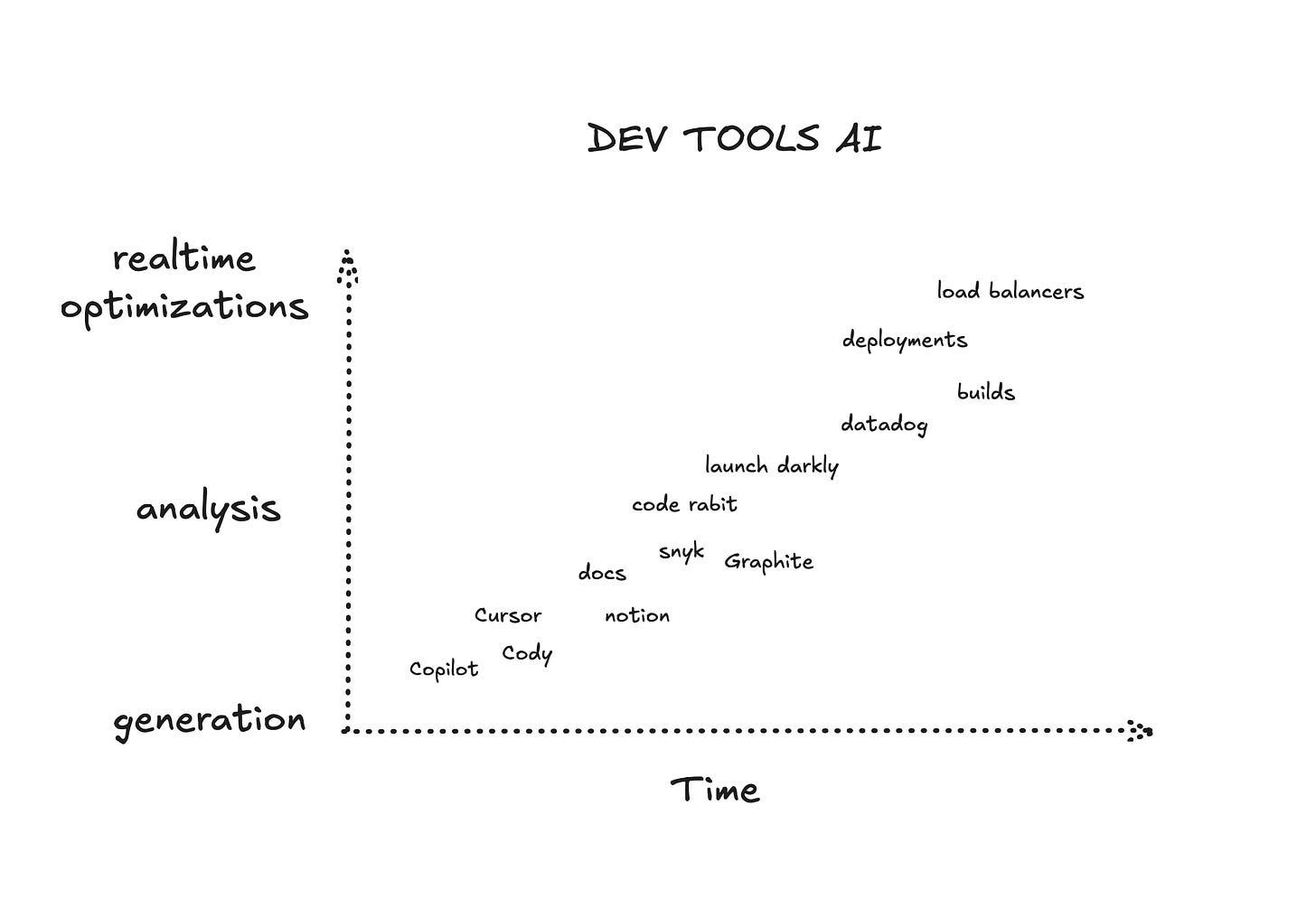

BLUF: AI in dev-tools started with code generation, is moving to analysis, and may eventually optimize graph-based operations like builds and deployments.

As I look across the current landscape of developer tools, it’s nearly impossible to ignore the rapidly growing presence of AI. I don’t believe this surge is just a passing fad or hollow marketing spin - there’s clearly something substantial underneath it all. Companies are experimenting tirelessly, layering AI capabilities onto existing products at breakneck speed. I see a massive collective attempt to figure out what really works, what developers actually want, and how AI can best integrate into our workflows. Of course, some attempts will inevitably fail. But many could become enduring parts of the modern software toolkit - as I think Copilot has.

Historically, when I think about how foundational dev tools emerged, they followed a pretty recognizable path. Innovations often originated in large companies or open-source communities, simmering quietly for months or years before finally turning into commercial products. For instance, GitHub emerged from the open-source Git ecosystem. PagerDuty was inspired by internal on-call practices at Amazon. Statsig took lessons from Facebook’s feature flagging infrastructure. This gradual, inside-out pattern gave solutions time to mature before they hit the mainstream.

But the AI era looks different. Because AI is so new and the ecosystem of existing dev tools so well established, companies don’t have the luxury of slowly incubating ideas internally. Instead, they’re racing to integrate AI wherever possible and see what sticks. It’s a high-risk, high-reward strategy: those who get it right could define entirely new categories. Take GitHub Copilot, for example - it has already soared to over a million paying users, a remarkable feat in such a short timeframe. Or consider how fast the market has rewarded companies like Cursor, which recently reached a $2.5 billion valuation, and Codeium, which hit a $1.25 billion valuation. Even Jeff Bezos, reportedly returning to Amazon with renewed focus on AI, has described it as an enabling layer that will eventually be woven into everything we do. I share that sense - AI is not just a new tool; it’s a foundational capability that will eventually infiltrate the entire stack.

“AI is like electricity… It will be in everything” - Jeff Bezos

Three emerging patterns

As I survey the overall landscape, I’m noticing a few broad patterns in how AI is integrating into dev tools. These are, of course, rough generalizations, but they help frame the current state of play:

1. Crowded, competitive spaces

The first pattern is the intense competition in categories where AI-powered tools have already proven useful, especially in code generation. Codegen was a natural starting point because Large Language Models (LLMs) excel at producing text, and code is just a special kind of text.

Code generation and intelligent suggestion:

Tools like GitHub Copilot, Vercel’s v0, Cursor, Windsurf by Codeium, Amazon Q Developer, and Cody by Sourcegraph all provide AI-driven code suggestions. They often integrate RAG (Retrieval-Augmented Generation) techniques to pull relevant context from your codebase. From my vantage point, this space is becoming commoditized. It’s easy for me to switch tools if something else claims to be “smarter” this month. The result is a fierce race: each player is trying to outdo the others on accuracy, speed, and integration depth. I’m curious to see who can differentiate themselves with unique features that actually matter to developers, rather than just riding a wave of novelty - though I suspect the space will remain fragmented.
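To make the RAG idea concrete, here is a minimal sketch of the retrieval step. It is not how any of the tools above actually work: I’m assuming a toy in-memory codebase (the hypothetical `SNIPPETS` dict) and plain bag-of-words cosine similarity, where real products would more likely use learned embeddings and a vector index. The function names are mine, made up for illustration.

```python
import re
from collections import Counter
from math import sqrt

# Hypothetical mini "codebase": file name -> source text (illustrative only).
SNIPPETS = {
    "auth.py": "def login(user, password): return check_password(user, password)",
    "billing.py": "def charge(card, amount): return gateway.charge(card, amount)",
    "config.py": "def load_config(path): return json.load(open(path))",
}

def tokenize(text: str) -> Counter:
    """Lowercase the text and count alphanumeric tokens (underscores split words)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, snippets: dict[str, str], k: int = 2) -> list[str]:
    """Return the names of the k snippets most similar to the query."""
    q = tokenize(query)
    ranked = sorted(snippets, key=lambda name: cosine(q, tokenize(snippets[name])), reverse=True)
    return ranked[:k]

def build_prompt(query: str, snippets: dict[str, str], k: int = 2) -> str:
    """Prepend the retrieved snippets to the task, ready to send to a model."""
    context = "\n\n".join(f"# {name}\n{snippets[name]}" for name in retrieve(query, snippets, k))
    return f"Context:\n{context}\n\nTask: {query}"

if __name__ == "__main__":
    print(build_prompt("add retry to charge card", SNIPPETS, k=1))
```

The shape is what matters here: rank pieces of the codebase against the request, then put the best matches in front of the model. Much of the differentiation between tools plausibly lives in how well that ranking step works.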

2. Spaces with a few AI experiments (but growing quickly)

The second pattern I see is categories where AI has shown promise, but the ecosystem isn’t yet as crowded. These are areas where one or two early entrants are experimenting with what’s possible - pushing beyond generation into more nuanced tasks like reasoning about code, analyzing system health, or documenting complex infrastructure. A few examples:

Observability & monitoring:

Datadog is incorporating LLM-based insights into its observability platform, offering things like time-series forecasting and generative queries. The idea is that instead of sifting through endless metrics, I can ask an AI-driven layer to guide me toward root causes and patterns.

Search & documentation:

I’ve noticed tools like Notion integrating AI to help navigate internal knowledge bases and link code discussions with higher-level documentation. GitHub’s Copilot can also answer questions about your code directly. At the same time, Swimm and Docify use AI to generate and maintain documentation, reducing the friction of keeping docs up-to-date.

CI, review, and security analysis:

We’re starting to see AI applied to code reviews and continuous integration feedback loops, with tools like CodeRabbit and Graphite Reviewer offering AI-assisted insights. On the security front, Snyk, Checkmarx, and Semgrep are working on AI-driven vulnerability detection and linting. These analysis tasks are trickier because they aren’t just about producing code. They involve accurately interpreting large volumes of code and context, catching subtle risks, and minimizing false positives. I’m impressed by how quickly these AI systems have started to handle more complex reasoning tasks, even though there’s a long way to go.

Feature flags & database queries:

It’s fascinating to see AI reach into feature management and data analysis. LaunchDarkly is experimenting with AI-based strategies for implementing and managing feature flags. Meanwhile, data tools like PopSQL, Hex, and AskYourDatabase let you query and visualize databases more conversationally. All of this suggests AI can increasingly serve as a bridge between developers and the often arcane tools we rely on daily.

3. Spaces still barely touched by AI

Finally, there are areas of dev tooling that, at least for now, remain largely untouched by AI. Build systems, deployments, hosting, and other “heavy ops” processes have yet to feel the same wave of innovation. Why? These areas demand high performance, determinism, and an unwavering adherence to correctness. Builds must consistently produce identical outputs. Deployment pipelines have to be stable and predictable, not just “smart.” We’re dealing with complex DAGs (Directed Acyclic Graphs) for builds and tests, careful rollouts to production, and a need for absolute trust.
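For readers who haven’t worked with build graphs directly, here is a small sketch of what a build DAG looks like and why it is deterministic. The step names in `BUILD_GRAPH` are hypothetical; the only real API used is `graphlib.TopologicalSorter` from the Python standard library (3.9+).

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each step maps to the set of steps it depends on.
BUILD_GRAPH = {
    "compile": set(),
    "unit_tests": {"compile"},
    "package": {"compile"},
    "deploy": {"unit_tests", "package"},
}

def build_order(graph: dict[str, set[str]]) -> list[str]:
    """Return one valid execution order in which every step follows its dependencies."""
    return list(TopologicalSorter(graph).static_order())

if __name__ == "__main__":
    print(build_order(BUILD_GRAPH))
```

The graph fixes what must come before what, but it usually leaves some freedom (here, `unit_tests` and `package` can run in either order or in parallel). That leftover freedom is the room an optimizer, AI-driven or not, would have to work with.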

Injecting a probabilistic, reasoning-based layer into something like a build pipeline might seem like adding unnecessary risk. Yet I’m intrigued by what might happen if we can get comfortable with an AI that understands code at a deep level. Maybe it can identify which tests are likely to fail and skip unnecessary checks, speeding up the feedback loop. Maybe it can reorder builds to fail fast, saving time and compute resources. Maybe deployments can become more dynamic, with AIs monitoring code changes and production signals to roll out features more intelligently.
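Here is a rough sketch of what “skip unnecessary checks” and “fail fast” could look like in practice. Everything in it is an assumption on my part: the `CandidateTest` record, the per-test `p_fail` estimate (which in this scenario would come from some model looking at the change), and the heuristic of ordering by failure probability per second of runtime. I haven’t seen a mainstream tool do exactly this.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CandidateTest:
    """Hypothetical record describing one test in the suite."""
    name: str
    deps: frozenset[str]   # source files the test exercises
    p_fail: float          # estimated failure probability for this change (assumed given)
    seconds: float         # typical runtime

def select_affected(tests: list[CandidateTest], changed: frozenset[str]) -> list[CandidateTest]:
    """Keep only tests that touch at least one changed file."""
    return [t for t in tests if t.deps & changed]

def fail_fast_order(tests: list[CandidateTest]) -> list[CandidateTest]:
    """Run tests with the highest failure probability per second first."""
    return sorted(tests, key=lambda t: t.p_fail / t.seconds, reverse=True)

if __name__ == "__main__":
    suite = [
        CandidateTest("test_api", frozenset({"api.py", "db.py"}), p_fail=0.10, seconds=20.0),
        CandidateTest("test_db", frozenset({"db.py"}), p_fail=0.30, seconds=5.0),
        CandidateTest("test_ui", frozenset({"ui.py"}), p_fail=0.50, seconds=2.0),
    ]
    plan = fail_fast_order(select_affected(suite, frozenset({"db.py"})))
    print([t.name for t in plan])
```

Note that the risky part is the selection step, not the ordering: reordering tests never hides a failure, while skipping a test on a wrong dependency guess can. That asymmetry is a big reason trust matters so much in this layer.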

This is still speculative territory, though. I haven’t seen a mainstream tool that does all this reliably. But the notion is exciting: what if, a few years down the line, AI could meaningfully optimize these core operational pipelines?

A walk from generation to analysis to dynamic optimization

When I look at the path AI is taking through dev tools, I see a natural progression:

We started with generation, which was the easiest lift - just produce code snippets and suggestions. This is where the first success stories popped up, and where the market became crowded almost overnight.

Now, I’m seeing a shift toward analysis. This includes code review assistance, security vulnerability detection, documentation updates, feature flag strategies, and observability insights. AI is moving beyond just spitting out code and is starting to truly understand what it’s looking at. These tasks demand higher accuracy and more advanced reasoning. Hallucinations and false positives are real problems that must be actively mitigated.

The next frontier, and the highest “hanging fruit,” will be integrating AI into the most complex and deterministic parts of our toolchains - build-test-deploy-serve pipelines and their associated infrastructure. This would mean using AI not just to produce or interpret code, but to streamline, optimize, and orchestrate the entire software delivery cycle. If AI can confidently help me decide which tests to run, how to order them, and how to roll out changes in production, then it’s not just a neat trick anymore - it’s a transformative capability that touches every corner of the development process.

I believe that over the next decade, we’ll see more and more attempts to push AI into these heavy operational layers. Success here won’t come easily. It will require building trust, rigorously validating improvements, and ensuring that adding “intelligence” doesn’t compromise reliability. But if this vision comes to pass, AI will have completed a remarkable journey: from simple code suggestions to a deeply integrated layer that optimizes how we build, test, deploy, and run software at scale.