I spend a lot of time thinking about stacked pull requests. As the founder of Graphite, I’ve watched this developer workflow grow steadily more popular, first inside Facebook and now across thousands of fast-moving teams. The idea is simple: instead of working on large, monolithic pull requests, you break changes into a series of small, incremental PRs that build on top of each other. The method is designed to help teams move quickly without sacrificing the quality and clarity that come with smaller chunks of code.

At the same time, I’m watching with a mix of excitement and caution as a new trend emerges: AI-generated pull requests. Several companies are racing to build “self-driving PRs,” in which autonomous systems read your entire codebase, understand outstanding tasks, and submit their own PRs, like tireless open-source contributors who never sleep and never lose focus. In theory, this could dramatically reduce the time developers spend manually writing boilerplate code, hunting down bugs, or implementing repetitive features.

What intrigues me is how these two trends, stacked PRs and AI-generated PRs, might intersect. What does tomorrow’s workflow look like when we blend the human pattern of stacking with the robotic efficiency of machine-generated pull requests?

Why Smaller PRs Are Better

It’s widely accepted that small, focused PRs are the gold standard. They’re easier to review, less likely to introduce bugs, and quicker to reach production. Small PRs also reduce the risk of large merge conflicts and make rollbacks painless when something unexpected happens in production.

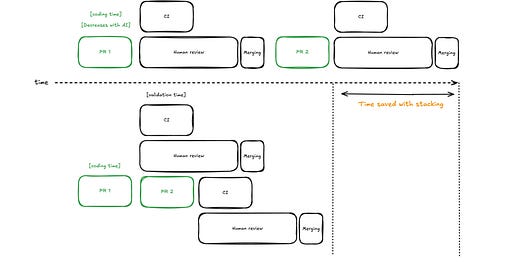

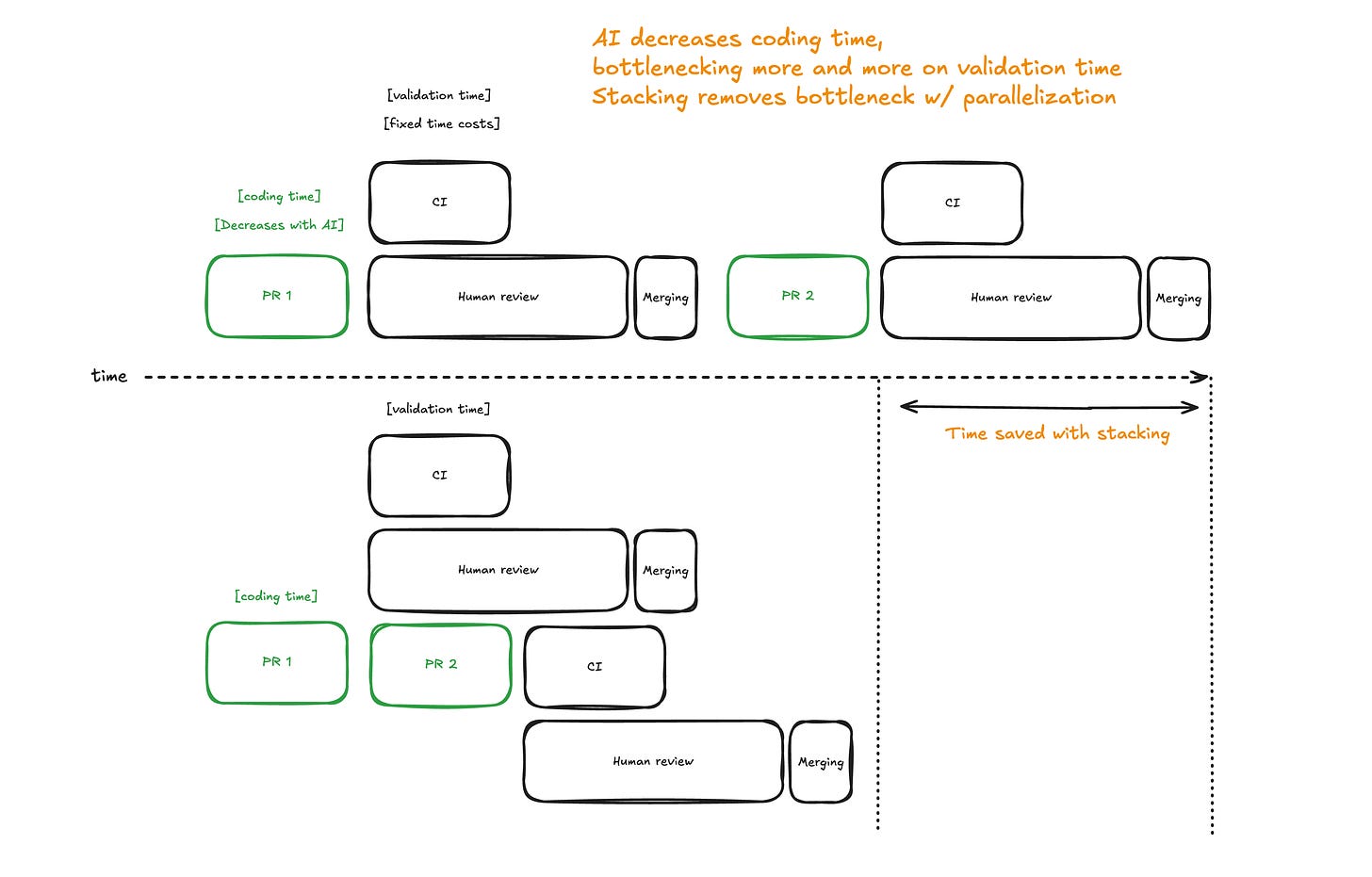

The challenge with small PRs is the overhead. After creating one, you typically wait: for continuous integration (CI) checks, for a code review, and then for a merge queue. If you work linearly, you’re stuck twiddling your thumbs every time you push a small change. That’s where stacking comes in. Instead of waiting, you branch off the unmerged PR and keep going. Your work proceeds in parallel with the review and CI cycles, accelerating iteration without bloating the scope of any single PR.

This is incredibly liberating for teams that value speed. It creates a virtuous cycle: small PRs remain small because you never feel the need to stuff a bunch of extra changes in just to stay busy. Instead, you rely on stacking to keep moving forward, adding small increments of value to the codebase in quick succession.
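To make the pipelining argument behind stacking concrete, here is a toy timing model in Python. It is a minimal sketch, not how Graphite or any merge queue actually schedules work: the `Workflow` class, the function names, and the hour values are hypothetical, and the model ignores review feedback, rework, and rebasing. It only contrasts waiting on each PR before starting the next with branching off the unmerged PR and continuing.

```python
from dataclasses import dataclass


@dataclass
class Workflow:
    """Hypothetical per-PR timings in hours (illustrative assumptions, not measurements)."""
    num_prs: int
    code_time: float  # time to write one small PR
    wait_time: float  # CI + code review + merge queue for one PR


def linear_finish(w: Workflow) -> float:
    """Start PR k only after PR k-1 has merged, so every wait is serialized."""
    t = 0.0
    for _ in range(w.num_prs):
        t += w.code_time + w.wait_time
    return t


def stacked_finish(w: Workflow) -> float:
    """Branch each PR off the previous, still-unmerged PR and keep coding.

    Simplifying assumptions: each PR's validation starts as soon as it is pushed,
    validations of different PRs overlap, nothing needs rework, and PRs still
    merge bottom-up (a PR cannot merge before the one beneath it).
    """
    last_merge = 0.0
    for k in range(1, w.num_prs + 1):
        pushed = k * w.code_time             # the author never stops to wait
        ready = pushed + w.wait_time         # CI and review finish for this PR
        last_merge = max(ready, last_merge)  # enforce bottom-up merge order
    return last_merge


if __name__ == "__main__":
    w = Workflow(num_prs=5, code_time=1.0, wait_time=4.0)
    print(linear_finish(w), stacked_finish(w))  # 25.0 9.0
```

With these made-up numbers, five PRs take 25 hours linearly but 9 hours stacked. As `code_time` approaches zero, the linear total approaches `num_prs * wait_time` while the stacked total approaches a single `wait_time`, which is why validation, not writing, becomes the bottleneck once coding is nearly free.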

Collaboration Through Stacks

Stacking also makes collaborative development more natural. Instead of two engineers editing the same file at the same time, which can create confusion or conflicts, they coordinate through a chain of PRs. For example, one engineer might introduce a new API endpoint in the first PR, while another stacks a second PR on top that integrates it into the frontend. The result is a neat, linear sequence of changes that makes it easy to understand how the feature evolved, roll back if needed, and track down when a particular bug was introduced.

A Perfect Match for AI-Generated PRs

Now, consider AI-driven systems slotting into this workflow. The advantages that humans gain from small, stacked PRs would matter even more to AI. If an AI model can propose a tiny improvement - a refactoring here, a style tweak there - it can do so rapidly and repeatedly. It won’t get bored or frustrated by waiting on feedback loops; it will just move on to the next incremental improvement.

But to maintain those rapid-fire cycles, you don’t want the AI sitting idle, waiting for PR #1 to merge before starting on PR #2. As AI drives coding time toward zero, validation steps such as CI and review become the bottleneck. If an AI agent is writing code continuously, it makes perfect sense to let it stack PRs on top of each other: while the first PR waits for CI and human review, the agent can already be working on the next unit of value. This parallelization fits naturally with the AI’s unending capacity for work.

In the same way that humans collaborate through stacks, AI can collaborate with humans - or even with other AI models - in a stacked environment. One model might be great at quickly drafting a new UI component, while another model specializes in performance optimizations. A human can start the chain, the AI can pick it up and continue, and another model (or a human) can finish the refinement. This layered approach lets everyone - human or machine - contribute within a structure that remains clear and incremental.

The Future: Agentic, Modular, and Fast

Most engineering processes improve when we modularize, create dependency graphs, and parallelize work. Code changes are no exception. Stacked PRs provide a natural way to apply these principles to software development, and as we move toward a future of agentic, AI-driven code generation, stacking only becomes more important.

Rather than viewing self-driving PRs as a separate paradigm, I see them as a natural extension of what we’re already doing: making PRs smaller, faster, and more collaborative. The synergy between stacked PRs and AI-generated PRs will define the cutting edge of software delivery - leading to codebases that evolve continuously, effortlessly, and intelligently.

In a world where code can be written at any time, from anywhere (including by non-human agents), the need to organize, structure, and control that flow of changes becomes paramount. Stacked PRs provide that structure. AI-generated PRs bring the raw horsepower. Together, they create a future where software development is both high-velocity and reliably stable - exactly the kind of environment we should be building toward.